Hi, today we're going to learn about computer system organization and processors. So, are you ready for it? You will be.

Computer System Organization

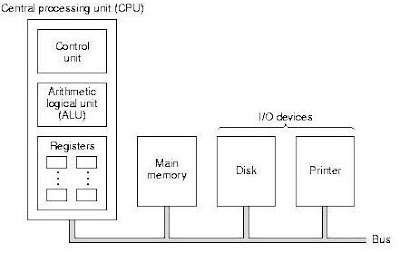

A digital computer consists of an interconnected system of processors, memories, and input/output (I/O) devices. This lecture is an introduction to these three components and to their interconnection, as background for the detailed examination of specific levels in the five succeeding lectures. Processors, memories, and I/O are key concepts and will be present at every level, so we'll start our study of computer architecture by looking at all three in turn.

Processors

The organization of a simple bus-oriented computer is shown in the picture below. The CPU (Central Processing Unit) is the "brain" of the computer. Its function is to execute programs stored in the main memory by fetching their instructions, examining them, and then executing them one after another. The components are connected by a bus, which is a collection of parallel wires for transmitting address, data, and control signals. Buses can be external to the CPU, connecting it to memory and I/O devices, but also internal to the CPU, as we will see shortly.

The CPU is composed of several distinct parts. The control unit is responsible for fetching instructions from main memory and determining their type. The arithmetic logic unit performs operations such as addition and Boolean AND needed to carry out the instructions.

The CPU also contains a small, high-speed memory used to store temporary results and certain control information. This memory is made up of a number of registers, each of which has a certain size and function. Usually, all the registers have the same size. Each register can hold one number, up to some maximum determined by the size of the register. Registers can be read and written at high speed since they are internal to the CPU.
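To make the "maximum determined by the size of the register" concrete, here is a minimal sketch. It assumes unsigned integers and models a register write by keeping only the low bits; the function names are made up for illustration and do not come from any particular machine.

```python
def max_unsigned_value(register_bits: int) -> int:
    """Largest unsigned integer an n-bit register can hold: 2**n - 1."""
    return (1 << register_bits) - 1


def store(value: int, register_bits: int) -> int:
    """Model a register write by keeping only the low n bits (assumed behavior)."""
    return value & max_unsigned_value(register_bits)


for bits in (8, 16, 32):
    print(bits, max_unsigned_value(bits))

# A value that does not fit is truncated in this model.
print(store(300, 8))
```

Real CPUs differ in how they treat overflow (wrapping, flags, traps), so the truncation above is only one possible model.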

Registers

The most important register is the Program Counter (PC), which points to the next instruction to be fetched for execution. (The name "program counter" is somewhat misleading because it has nothing to do with counting anything, but the term is universally used.) Also important is the Instruction Register (IR), which holds the instruction currently being executed. Most computers have numerous other registers as well, some of them general purpose and some for specific purposes.

CPU Organization

The internal organization of part of a typical von Neumann CPU is shown in more detail in the picture on the right. This part is called the data path and consists of the registers (typically 1 to 32), the ALU (Arithmetic Logic Unit), and several buses connecting the pieces. The registers feed into two ALU input registers, labeled A and B in the picture. These registers hold the ALU input while the ALU is performing some computation.

ALU (Arithmetic Logic Unit)

The ALU itself performs addition, subtraction, and other simple operations on its inputs, thus yielding a result in the output register. This output register can be stored back into a register. Later on, the register can be written (i.e., stored) into memory, if desired. Not all designs have the A, B, and output registers.

Most instructions can be divided into one of two categories: register-memory or register-register. Register-memory instructions allow memory words to be fetched into registers, where they can be used as ALU inputs in subsequent instructions, for example. ("Words" are the units of data moved between memory and registers. A word might be an integer. We will discuss memory organization in the next lecture.) Other register-memory instructions allow registers to be stored back into memory. The other kind of instruction is register-register. A typical register-register instruction fetches two operands from the registers, brings them to the ALU input registers, performs some operation on them, for example, addition or Boolean AND, and stores the result back in one of the registers. The process of running two operands through the ALU and storing the result is called the data path cycle and is the heart of most CPUs. To a considerable extent, it defines what the machine can do.

The faster the data path cycle is, the faster the machine runs.
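The data path cycle described above can be sketched in a few lines. This is only an illustrative model: the 16-bit word size, the register count, and the operation names `ADD`, `SUB`, and `AND` are assumptions, not details from the lecture.

```python
from typing import Callable, Dict, List

WORD_MASK = 0xFFFF  # assumption: 16-bit registers

# Hypothetical ALU operation table; the names are illustrative only.
ALU_OPS: Dict[str, Callable[[int, int], int]] = {
    "ADD": lambda a, b: a + b,
    "SUB": lambda a, b: a - b,
    "AND": lambda a, b: a & b,
}


def data_path_cycle(registers: List[int], op: str, src1: int, src2: int, dest: int) -> None:
    """One register-register instruction: two operands in, one result out."""
    a = registers[src1]                      # operand copied to ALU input register A
    b = registers[src2]                      # operand copied to ALU input register B
    output = ALU_OPS[op](a, b) & WORD_MASK   # ALU result held in the output register
    registers[dest] = output                 # result stored back into a register


regs = [0] * 8
regs[1], regs[2] = 6, 3
data_path_cycle(regs, "ADD", 1, 2, 3)   # regs[3] = regs[1] + regs[2]
data_path_cycle(regs, "AND", 1, 2, 4)   # regs[4] = regs[1] & regs[2]
print(regs[3], regs[4])
```

Masking the result with `WORD_MASK` is how this sketch keeps values within the register size. A subtraction that goes negative wraps around instead of producing a negative number.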

Instruction Execution

The CPU executes each instruction in a series of small steps. Roughly speaking, the steps are as follows:

1- Fetch the next instruction from memory into the instruction register.

2- Change the program counter to point to the following instruction.

3- Determine the type of instruction just fetched.

4- If the instruction uses a word in memory, determine where it is.

5- Fetch the word, if needed, into a CPU register.

6- Execute the instruction.
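The six steps above can be written as a tiny interpreter loop. What follows is a sketch for a made-up machine: the instruction format `(opcode, register, address)` and the opcodes `LOAD`, `ADD`, `STORE`, and `HALT` are hypothetical and chosen only to keep the example short. Instructions and data share one memory, as in a von Neumann machine.

```python
from typing import List, Tuple, Union

# Hypothetical instruction format: (opcode, register number, memory address).
Instruction = Tuple[str, int, int]
Cell = Union[Instruction, int]  # a memory cell holds an instruction or a data word


def run(memory: List[Cell], num_registers: int = 4) -> List[int]:
    """Execute the program starting at address 0 until HALT; return the registers."""
    registers = [0] * num_registers
    pc = 0  # program counter: address of the next instruction

    while True:
        ir = memory[pc]            # 1. fetch the next instruction into the IR
        pc += 1                    # 2. point the PC at the following instruction
        if not isinstance(ir, tuple):
            raise ValueError(f"cell {pc - 1} does not hold an instruction")
        opcode, reg, address = ir  # 3. determine the type of instruction

        if opcode == "HALT":
            return registers

        word = 0
        if opcode in ("LOAD", "ADD"):
            # 4. the instruction uses a word in memory; it lives at `address`
            cell = memory[address]
            if not isinstance(cell, int):
                raise ValueError(f"cell {address} does not hold a data word")
            word = cell            # 5. fetch the word into the CPU

        # 6. execute the instruction
        if opcode == "LOAD":
            registers[reg] = word
        elif opcode == "ADD":
            registers[reg] += word
        elif opcode == "STORE":
            memory[address] = registers[reg]
        else:
            raise ValueError(f"unknown opcode {opcode!r}")


program: List[Cell] = [
    ("LOAD", 0, 5),   # R0 = memory[5]
    ("ADD", 0, 6),    # R0 = R0 + memory[6]
    ("STORE", 0, 7),  # memory[7] = R0
    ("HALT", 0, 0),
    0,                # address 4: unused
    20,               # address 5: data
    22,               # address 6: data
    0,                # address 7: result goes here
]
final_registers = run(program)
print(final_registers[0], program[7])
```

Note that the program counter is updated in step 2, before the instruction executes, so it always points at the next instruction to fetch.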

RISC vs CISC

In 1980, a group at Berkeley led by David Patterson and Carlo Séquin began designing VLSI CPU chips that did not use interpretation (Patterson, 1985; Patterson and Séquin, 1982). They coined the term RISC for this concept and named their CPU chip the "RISC I CPU", followed shortly by the "RISC II".

At the time these simple processors were first being designed, the characteristic that caught everyone's attention was the relatively small number of instructions available, typically around 50. This number was far smaller than the 200 to 300 on established computers such as the DEC VAX and the large IBM mainframes. In fact, the acronym RISC stands for Reduced Instruction Set Computer, which was contrasted with CISC, which stands for Complex Instruction Set Computer (a thinly veiled reference to the VAX, which dominated university Computer Science Departments at the time). Nowadays few people think that the size of the instruction set is a major issue, but the name stuck.

To make a long story short, a great religious war ensued, with the RISC supporters attacking the established order (VAX, Intel, large IBM mainframes). They claimed that the best way to design a computer was to have a small number of simple instructions that execute in one cycle of the data path, namely, fetching two registers, combining them somehow (e.g., adding or ANDing them), and storing the result back in a register. Their argument was that even if a RISC machine takes four or five instructions to do what a CISC machine does in one instruction, if the RISC instructions are 10 times as fast (because they are not interpreted), RISC wins. It is also worth pointing out that by this time the speed of main memories had caught up to the speed of read-only control stores, so the interpretation penalty had greatly increased, strongly favoring RISC machines. One might think that given the performance advantages of RISC technology, RISC machines (such as the Sun UltraSPARC) would have mowed over CISC machines (such as the Intel Pentium) in the marketplace. Nothing like this has happened. Why not?

First of all, there is the issue of backward compatibility and the billions of dollars companies have invested in software for the Intel line. Second, surprisingly, Intel has been able to employ the same ideas even in a CISC architecture. Starting with the 486, Intel CPUs contain a RISC core that executes the simplest (and typically most common) instructions in a single data path cycle, while interpreting the more complicated instructions in the usual CISC way. The net result is that common instructions are fast and less common instructions are slow. While this hybrid approach is not as fast as a pure RISC design, it gives competitive overall performance while still allowing old software to run unmodified.
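Going back to the RISC supporters' speed argument from earlier in this section, the arithmetic is easy to check. The sketch below uses only the numbers quoted there (four or five RISC instructions per CISC instruction, each 10 times as fast); the function name is made up for illustration.

```python
def relative_time(risc_instructions_per_cisc: float, risc_speedup: float) -> float:
    """Time the RISC machine needs for the same work, with CISC time taken as 1.0."""
    return risc_instructions_per_cisc / risc_speedup


for count in (4, 5):
    # A result below 1.0 means the RISC machine finishes the same work sooner.
    print(count, relative_time(count, 10.0))
```

Under these assumptions the RISC machine needs about half the time or less, which is the whole of the claim. The argument only holds as long as the per-instruction speedup stays larger than the instruction-count penalty.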

Note: (for more details visit this site).
